Continue crunching 5.06 Ralph??

Message boards : Current tests : Continue crunching 5.06 Ralph??

| Author | Message |

|---|---|

feet1st Joined: 7 Mar 06 Posts: 313 Credit: 116,623 RAC: 0 |

I haven't found any posts indicating if we should cancel any remaining Ralph WUs or if their results are still going to be useful. When you cut a new version, I always abort my old Ralph WUs, sometimes even when they're in progress, and I don't report them on the aborted thread, because I figure you can see by date/time and release that they don't need to be studied... is that the right thing to do? Can we start a thread for Ralph instructions for use? Or something with guidelines? |

feet1st Joined: 7 Mar 06 Posts: 313 Credit: 116,623 RAC: 0 |

I see more WUs were produced for Ralph, but application version still shows 5.06. I'm not seeing any discussion on what the objectives are for this. |

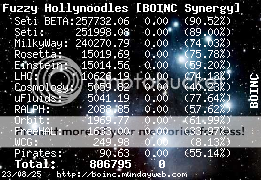

Fuzzy Hollynoodles Joined: 19 Feb 06 Posts: 37 Credit: 2,089 RAC: 0 |

I had these two in my cache at the same time:

Rosetta: AB_CASP6_t216__458_3090_0
https://boinc.bakerlab.org/rosetta/result.php?resultid=18451899
CPU time 11195.640625
stderr out

    <core_client_version>5.4.6</core_client_version>
    <stderr_txt>
    # random seed: 2184411
    # cpu_run_time_pref: 14400
    # DONE :: 1 starting structures built 30 (nstruct) times
    # This process generated 3 decoys from 3 attempts
    </stderr_txt>

Ralph: AB_CASP6_t198__451_25_1
https://ralph.bakerlab.org/result.php?resultid=99001
CPU time 6211.5
stderr out

    <core_client_version>5.4.6</core_client_version>
    <stderr_txt>
    # random seed: 3081944
    # cpu_run_time_pref: 7200
    # cpu_run_time_pref: 7200
    # DONE :: 1 starting structures built 5 (nstruct) times
    # This process generated 5 decoys from 5 attempts
    </stderr_txt>

The difference may lie in my Target CPU run time, as I have

Rosetta: Target CPU run time 4 hours
Ralph: Target CPU run time 2 hours

Explanations, anybody?

"I'm trying to maintain a shred of dignity in this world." - Me

|

anders n Joined: 16 Feb 06 Posts: 166 Credit: 131,419 RAC: 0 |

"I had these two in my cache at the same time:"

You are right: it is your target run time that ended the first one. The second asked for 5 runs and was reported once it had completed them.

Anders n |

|
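The two stopping rules described above can be sketched as follows. This is a rough model, not the application's actual logic: the function name is hypothetical, and the rule that a work unit stops early when one more decoy at the same pace would overshoot the target time is an assumption made to fit the quoted numbers. The inputs come from the two results posted above.

```python
def decoys_completed(target_seconds, nstruct, seconds_per_decoy):
    """Count decoys finished before either stopping rule fires.

    Hypothetical model: stop when nstruct decoys are done, or when one
    more decoy at the same pace would exceed the target CPU run time.
    """
    done = 0
    elapsed = 0.0
    while done < nstruct:
        if elapsed + seconds_per_decoy > target_seconds:
            break  # time-limited: the next decoy would overshoot the target
        elapsed += seconds_per_decoy
        done += 1
    return done, elapsed


# Rosetta result: 3 decoys in 11195.640625 s, target 14400 s, nstruct 30
rosetta = decoys_completed(14400, 30, 11195.640625 / 3)
# Ralph result: 5 decoys in 6211.5 s, target 7200 s, nstruct 5
ralph = decoys_completed(7200, 5, 6211.5 / 5)
print(rosetta[0], ralph[0])  # prints: 3 5
```

Under this model the Rosetta WU is cut off by the target time after 3 of 30 decoys, while the Ralph WU finishes all 5 requested decoys before its 2-hour target is reached.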

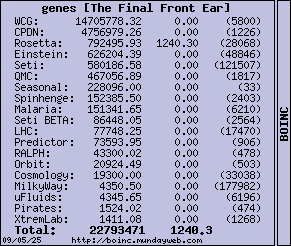

genes Joined: 16 Feb 06 Posts: 45 Credit: 43,706 RAC: 20 |

I have this 5.06 WU: WATCHDOG_KILL_VERY_LONG_JOBS_447_2_1 Currently at 1.03% after 14 hours. It's not stuck, it's moving very slowly, at step 6 million and change. [edit] that's 62 million! [/edit] When is the watchdog supposed to kill it?

|

|

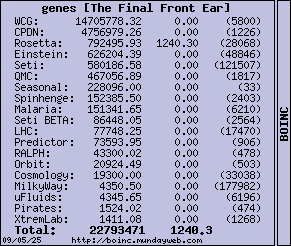

genes Joined: 16 Feb 06 Posts: 45 Credit: 43,706 RAC: 20 |

Well the watchdog finally killed it after almost 17 hours. I had my run-time preference set to 4 hours. I see that the watchdog waits for greater than 4X your preferred time. I guess that answers it... Resetting my run-time preference to (not selected) so it runs whatever the default is.

|
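The time-based reading of the watchdog can be written as simple arithmetic. This sketch assumes the watchdog fires once CPU time exceeds four times the run-time preference; the factor of 4 is the poster's inference from a single work unit, not documented behavior, and the function name is hypothetical.

```python
WATCHDOG_FACTOR = 4  # inferred from one WU in this thread; an assumption


def watchdog_should_kill(cpu_hours, preferred_hours, factor=WATCHDOG_FACTOR):
    """Return True once CPU time exceeds factor times the preference."""
    return cpu_hours > factor * preferred_hours


print(watchdog_should_kill(14, 4))  # False: 14 h is under the 16 h threshold
print(watchdog_should_kill(17, 4))  # True: 17 h is over the 16 h threshold
```

With a 4-hour preference the threshold is 16 hours, which is consistent with a WU still running at 14 hours and killed at almost 17.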

feet1st Joined: 7 Mar 06 Posts: 313 Credit: 116,623 RAC: 0 |

"I see that the watchdog waits for greater than 4X your preferred time."

I believe the watchdog is unaware of elapsed time relative to your preference. It looks at a number that isn't shown in the graphic, called the Rosetta score. This score is essentially a single, simple measure of how good the current model looks. As the model evolves, the score changes constantly. The watchdog looks for WUs whose score doesn't change for several trials. I'm not clear whether it checks at the start of every model, at every checkpoint, or at some other point. But if your score goes unchanged for an abnormally long time (note how vague that is across fast vs. slow machines and small vs. large WUs), it steps in to end the WU. In other words, the watchdog "knows" we should have seen some change by now, and since we have not, it ends the WU.

You can start to appreciate how tricky it was to get the right balance. That is likely why it took more than one attempt to get it right. The LAST thing you want to do is cancel a huge WU on a slow machine just because it's taking a long time to make progress. |
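The score-based reading can be sketched as a stall check over recent score samples. This is only an illustration of the idea described in the post: the function name, the `max_unchanged_checks` parameter, and the tolerance are all hypothetical, and the thread does not say how often the real watchdog samples the score.

```python
def score_is_stalled(score_history, max_unchanged_checks, tolerance=1e-9):
    """Return True if the score has not moved over the last N checks.

    score_history: scores sampled at successive checks, oldest first.
    max_unchanged_checks: how many consecutive unchanged checks count
    as a stall (a hypothetical tuning knob).
    """
    if len(score_history) <= max_unchanged_checks:
        return False  # not enough samples yet to call it a stall
    recent = score_history[-(max_unchanged_checks + 1):]
    return max(recent) - min(recent) <= tolerance


print(score_is_stalled([-10.0, -12.5, -12.5, -12.5, -12.5], 3))  # True
print(score_is_stalled([-10.0, -12.5, -12.5, -12.6], 3))  # False
```

Choosing `max_unchanged_checks` is the hard part the post points to: a value that suits a fast machine could end a large WU prematurely on a slow one.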


©2026 University of Washington

http://www.bakerlab.org